- Nasira – A FreeNAS build

- Nasira – Part II

- Nasira – Part III

- Nasira with a little SAS

- Moving Nasira to a different chassis

- When a drive dies

- Adding an SLOG… or not

- Coming full circle

- Throwing a short pass

- More upgrades but… no more NVMe

- Check your mainboard battery

With the last update, I mentioned upgrading from 990FX to X99 with the intent of eventually adding an NVMe carrier card and additional NVMe drives so I could have metadata vdevs. And I’m kind of torn on the additional NVMe drives.

No more NVMe?

While the idea of a metadata vdev is enticing, since it – along with a rebalance script – aims to improve response times when loading folders, there are three substantial flaws with this idea:

- it adds a very clear point of failure to your pool, and

- the code that allows that functionality hasn’t existed for nearly as long as the rest of ZFS, and

- the metadata is still cached in RAM unless you disable read caching entirely

Point 1 is obvious. You lose the metadata vdev, you lose your pool. So you really need to be using a mirrored pair of NVMe drives for that. This will enhance performance even more, since reads should be split between devices, but it also means additional expense. Especially if your mainboard doesn’t support bifurcation, since you then need a special carrier card at nearly 2.5x the expense of carrier cards that require bifurcation.

But even if it does, the carrier cards still aren’t inexpensive. There are alternatives, but they aren’t that much more affordable and only add complication to the build.

Point 2 is more critical. To say the special vdev code hasn’t been “battle tested” is… an understatement. Special vdevs are a feature first introduced to ZFS only in the last couple of years. And they probably haven’t seen a lot of use in that time. So the code alone is a significant risk.

Point 3 is also inescapable. Unless you turn off read caching entirely, the metadata is still cached in RAM or the L2ARC, substantially diminishing the benefit of having the special vdev.

On datasets with substantially more cache misses than hits – e.g., movies, music, television episodes – disabling read caching and relying on a metadata vdev kinda-sorta makes sense. Just make sure to run a rebalance script after adding it so all the metadata is migrated.

But rather than relying on a special vdev, add a fast and large L2ARC (2TB NVMe drives are 100 USD currently), turn primary caching to metadata only and secondary caching to all. Or if you’re only concerned with ensuring just metadata is cached, set both to metadata only.
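As a rough sketch of those two setups, the helper below builds the `zfs set` commands for the `primarycache` and `secondarycache` dataset properties. The dataset name `tank/media` and the function name `cache_commands` are placeholders of mine, not anything from Nasira, and the script only prints the commands rather than running them.

```python
# Values accepted by the ZFS primarycache/secondarycache properties.
VALID_CACHE_VALUES = {"all", "none", "metadata"}


def cache_commands(dataset: str, primary: str, secondary: str) -> list[str]:
    """Build the `zfs set` commands for a dataset's ARC and L2ARC caching.

    `dataset` is a hypothetical name such as "tank/media".
    """
    for value in (primary, secondary):
        if value not in VALID_CACHE_VALUES:
            raise ValueError(f"unsupported cache value: {value!r}")
    return [
        f"zfs set primarycache={primary} {dataset}",
        f"zfs set secondarycache={secondary} {dataset}",
    ]


if __name__ == "__main__":
    # Metadata only in RAM, everything eligible for the L2ARC.
    for cmd in cache_commands("tank/media", "metadata", "all"):
        print(cmd)
    # Metadata only in both caches.
    for cmd in cache_commands("tank/media", "metadata", "metadata"):
        print(cmd)
```

Printing instead of executing keeps this safe to try on any machine. Review the output before pasting it into a shell on the NAS.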

You should also look at the hardware supporting your NAS first to see what upgrades could be made to help with performance. Such as the platform upgrade I made from 990FX to X99. If you’re sitting on a platform that is severely limited in terms of memory capacity compared to your pool size – e.g. the aforementioned 990FX platform, which maxed out at 32GB dual-channel DDR3 – a platform upgrade will serve you better.

And then there’s tuning the cache. Or even disabling it entirely for certain datasets.

Do you really need to have more than metadata caching for your music and movies? Likely not. Music isn’t so bandwidth intense that it’ll see any substantial benefit from caching, even if you’re playing the same song over and over and over again. And movies and television episodes are often way too large to benefit from any kind of caching.

Photo folders will benefit from the cache being set to all, since you’re likely to scroll back and forth through a folder. But if your NAS is, like mine, pretty much a backup location and jumping point for offsite backups, again you’re likely not going to gain much here.

You can improve your caching with an L2ARC. But even that is still a double-edged sword in terms of performance. As with the SLOG, you need a fast NVMe drive. And the faster on random reads, the better. But as with the primary ARC, datasets where you’re far more likely to have cache misses than hits won’t benefit much from it.

So given the performance penalty that comes with cache misses, is it worth the expense of trying to alleviate it, based on how often you actually encounter that problem?

For me, it’s a No.

And for most home NAS instances, the answer will be No. Home business NAS is a different story, but whether it’ll benefit from special devices or an L2ARC is still going to come down to use case. Rather than trying to alleviate any performance penalty reading from and writing to the NAS, your money is probably better spent adding an NVMe scratch disk to your workstation and just using your NAS for a backup.

One thing I think we all need to accept is simply that not every problem needs to be solved. And in many cases, the money it would take to solve a problem far outstrips what you would save – in terms of time and/or money – by solving it. Breaking even on your investment would likely take a long time, if that point ever comes.

Sure, adding more NVMe to Nasira would be cool as hell. I even had an idea in mind of building a custom U.2 drive shelf with one or more IcyDock MB699VP-B 4-drive NVMe to U.2 enclosures, along with whatever adapters I’d need to integrate that into Nasira. Or just build a second NAS with all NVMe or SSD storage to take some of the strain off Nasira.

Except it’d be a ton of time and money acquiring and building that for very little gain.

And sure, having a second NAS with all solid-state storage for my photo editing that could easily saturate a 10GbE trunk sounds great. But why do that when a 4TB PCI-E 4.0×4 NVMe drive is less than 300 USD, as of the time of this writing, with several times the bandwidth of 10GbE? Plus I already have one in my personal workstation. Even PCI-E 3.0×4 NVMe outpaces 10GbE.
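To put rough numbers on that comparison, here is a small sketch that works out the theoretical link bandwidth of each option. It assumes the published signaling rates (8 GT/s per lane for PCI-E 3.0, 16 GT/s for 4.0) and 128b/130b encoding, and it ignores protocol overhead on both sides, so real drives and real network transfers will land below these figures. The function names are mine.

```python
def ethernet_gb_per_s(gigabits_per_s: float) -> float:
    """Raw line rate of an Ethernet link in gigabytes per second."""
    return gigabits_per_s / 8


def pcie_gb_per_s(gigatransfers_per_s: float, lanes: int) -> float:
    """Theoretical PCI-E 3.0/4.0 link bandwidth in gigabytes per second."""
    encoding_efficiency = 128 / 130  # 128b/130b line encoding
    return gigatransfers_per_s * lanes * encoding_efficiency / 8


if __name__ == "__main__":
    ten_gbe = ethernet_gb_per_s(10)
    print(f"10GbE: {ten_gbe:.2f} GB/s")
    for name, rate in (("PCI-E 3.0 x4", 8), ("PCI-E 4.0 x4", 16)):
        link = pcie_gb_per_s(rate, 4)
        print(f"{name}: {link:.2f} GB/s, {link / ten_gbe:.1f}x 10GbE")
```

Even on paper, the 3.0 link is roughly three times 10GbE and the 4.0 link roughly six times, which is the gap being described here.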

A PXE boot server is the only use case I see with having a second NAS with just solid-state storage. And I can’t even really justify that expense since I can easily just create a VM on Cordelia to provide that function, adding another NVMe drive to Cordelia for that storage.

Adding an L2ARC might help with at least my pictures folder. But the more I think about how I use my NAS, the more I realize how little I’m likely to gain adding it.

More storage, additional hardware upgrades

Here are the current specs:

CPU: Intel i7-5820K with Noctua NH-D9DX i4 3U cooler

RAM: 64GB (8x8GB) G.Skill Ripjaws V DDR4-3200 (running at XMP)

Mainboard: ASUS X99-PRO/USB 3.1

Power: Corsair RM750e (CP-9020262-NA)

HBA: LSI 9400-16i with Noctua NF-A4x10 FLX attached

NIC: Mellanox ConnectX-3 MCX311A-XCAT with 10GBASE-SR module

Storage: six (6) mirrored pairs totaling 66TB effective

SLOG: HP EX900 Pro 256GB

Boot drive: Inland Professional 128GB SSD

So what changed from the previous build?

First I upgraded the SAS card from the 9201-16i to the 9400-16i. The former is a PCI-E 2.0 card, while the latter is PCI-E 3.0 and low-profile – which isn’t exactly important in a 4U chassis.

After replacing the drive cable harness I mentioned in the previous article, I still had drive issues that arose on a scrub. Thinking the issue was the SAS card, I decided to replace it with an upgrade. Turns out the issue was the… hot swap bay. Oh well… The system is better off, anyway.

Now given what I said above, that doesn’t completely preclude adding a second NVMe drive as an L2ARC – just using a U.2 to NVMe enclosure – since I’m using only 3 of the 4 connectors. The connectors are tri-mode, meaning they support SAS, SATA, and U.2 NVMe. And that would be the easier and less expensive way of doing it.

This also opens things up for better performance, specifically during scrubs, given the growing pool. I also upgraded the Mellanox ConnectX-2 to a ConnectX-3 around the same time as the X99 upgrade to get a PCI-E 3.0 card there as well. And swapped from a 2-port card down to a single port.

The other change is swapping out the remaining 4TB pair for a 16TB pair. I don’t exactly need the additional storage now. Nasira wasn’t putting up any warnings about storage space running out. But it’s better to stay ahead of it, especially since I just added about another 1.5TB to the TV folder with the complete Frasier series and a couple other movies. And more 4K upgrades and acquisitions are coming soon.

One of the two 4TB drives is also original to Nasira, so it has over 7 years of near-continuous 24/7 service. The 6TB pairs were acquired in 2017 and 2018, so they’ll likely get replaced sooner rather than later as well, merely for age. Just need to keep an eye on HDD prices.

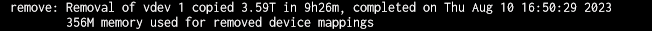

To add the new 16TB pair, I didn’t do a replace and resilver on each drive. Instead I removed the 4TB pair – yes, you can remove mirror vdevs from a pool if you’re using TrueNAS SCALE – and added the 16TB pair as if I was expanding the pool. This cut the time to add the new pair to however long was needed to remove the 4TB pair. If I did a replace/resilver on each drive, it would’ve taken… quite a bit more than that.

Obviously you can only do this if you have more free space than the vdev you’re removing – I’d say have at least 50% more. And 4TB for me was… trivial. But that obvious requirement is likely why ZFS doesn’t allow you to remove parity vdevs – i.e. RAID-Zx. It would not surprise me if the underlying code actually allows it, with a simple if statement cutting it off for parity vdevs. But how likely is anyone to have enough free space in the pool to account for what you’re removing? Unless the pool is brand new or nearly so… it’s very unlikely.
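That rule of thumb is easy to write down as a check. The sketch below is only my reading of it: it compares the free space on the vdevs that will remain against the size of the vdev being removed, with 50% headroom by default. Using the vdev's full size rather than the data actually allocated on it is a deliberately conservative assumption, and the figures in the example are made up for illustration.

```python
def can_remove_vdev(
    free_on_remaining_tb: float,
    vdev_size_tb: float,
    headroom: float = 1.5,
) -> bool:
    """Return True if the remaining vdevs can absorb the removed vdev.

    free_on_remaining_tb: free space on the vdevs that stay in the pool.
    vdev_size_tb: size of the vdev being removed (a conservative stand-in
        for the data that has to be relocated).
    headroom: multiplier for the safety margin; 1.5 means 50% extra.
    """
    return free_on_remaining_tb >= vdev_size_tb * headroom


if __name__ == "__main__":
    # Hypothetical figures, not Nasira's actual numbers.
    print(can_remove_vdev(free_on_remaining_tb=20.0, vdev_size_tb=4.0))
    print(can_remove_vdev(free_on_remaining_tb=5.0, vdev_size_tb=4.0))
```

With 20TB free elsewhere, pulling a 4TB mirror clears the bar easily. With only 5TB free it does not, since the margin asks for 6TB.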

It’s possible they’ll enable that for RAID-Zx at some point. But how likely is someone to take advantage of it? Whereas someone like me who built up a pool one mirrored pair at a time is a lot more likely to use that feature for upgrading drives since it’s a massive time saver, meaning an overall lesser risk compared to replace/resilver.

But all was not well after that.

More hardware failures…

After installing the new 16TB pair, I also updated TrueNAS to the latest version only to get… all kinds of instability on restart – kernel panics, in particular. At first I thought the issue was TrueNAS, and that the update had corrupted my installation, since none of the options in GRUB wanted to boot.

So I set about trying to reinstall the latest. Except the install failed to start up.

Then it would freeze in the UEFI BIOS screen.

These are signs of a dying power supply. But they’re actually also signs of a dying storage device failing to initialize.

So I first replaced the power supply. I had more reason to believe it was the culprit. The unit is 10 years old, for starters, and had been in near-continuous 24/7 use for over 7 years. And it’s a Corsair CX750M green-label, which is known for being made from lower-quality parts, even though it had been connected to a UPS for much of its near-continuous 24/7 life.

But, alas, it was not the culprit. Replacing it didn’t alleviate the issues. Even the BIOS screen still froze up once. That left the boot drive as the only other suspect: an ADATA 32GB SSD I bought a little over 5 years ago. And replacing that with an Inland 128GB SSD from Micro Center and reinstalling TrueNAS left me with a stable system.

That said, I’m leaving the new RM750e in place. It’d be stupid to remove a brand. new. power supply and replace it with a 10-year-old known lesser-quality unit. Plus the new one is gold rated (not that it’ll cut my power bill much) with a new 7-year warranty, whereas the old one was well out of warranty.

I also took this as a chance to replace the rear 80mm fans that came with the Rosewill chassis with beQuiet 80mm Pure Wings 2 PWM fans, since the new fans use standard 4-pin PWM connectors instead of needing to be powered directly from the power supply. Which simplified wiring just a little bit more.

Next step is replacing the LP4 power harnesses with custom cables from, likely, CableMod, after I figure out measurements.
