- Nasira – A FreeNAS build

- Nasira – Part II

- Nasira – Part III

- Nasira with a little SAS

- Moving Nasira to a different chassis

- When a drive dies

- Adding an SLOG… or not

- Coming full circle

- Throwing a short pass

- More upgrades but… no more NVMe

- Check your mainboard battery

Responses to my previous iteration in this build log led to me digging up some information that could prove useful to those who want to build a NAS. As I said in the previous iteration, if your spare hardware doesn’t support ECC, don’t fret too much about it. There are ways to detect and avoid data corruption that I’m sure many don’t really think about, but which come in handy for backing up data that is very important. I’ll get to that later.

First, let’s talk about hardware.

Power supply. A commenter on the Linus Tech Tips thread where I’m cross-posting this build log called into question my choice of power supply:

Only real bit of input I have is a suggestion at looking at a more efficient power supply for a NAS, while not crucial, it’d be nice to get better inefficiencies [sic] wherever possible.

On several forums, the CX line of Corsair power supplies gets a bad rap. And if I didn’t already have the CX750M, I would’ve selected something else. The CX750M is bronze rated, so I’d prefer something with a better 80+ rating and better reputation. But since I already have it, and I don’t need it for anything else, I might as well use it. This project is really another step in a goal of putting spare hardware to use.

ECC vs. non-ECC and AMD v. Intel. Thankfully this wasn’t nearly as controversial. Or my previous iteration could already be getting badmouthed behind my back on the FreeNAS forums for all I care. Instead there were merely some up-in-the-air questions about what supports ECC and what does not. First, Intel has a feature-rich archive where you can find all of their product specifications, including which desktop processors support ECC. All Xeons support ECC, but only a handful of their desktop processors do. Just make sure your motherboard supports ECC as well.

For AMD, the field is a little wider, but only so much. All Bulldozer processors support ECC, and that includes the AMD FX lineup. And all Opteron processors, regardless of architecture, support ECC. They’re built for servers, so obviously they will. But from what I could discern, the only other classes of AMD processors that support ECC are the Phenom II and the K10-class Athlon II.

So if you want to go with hardware that supports ECC, options are available off-the-shelf without having to go with server hardware. However, if you want to go server grade without spending a ton of cash, there are companies that sell refurbished workstations where you can get Xeon systems for pretty cheap, such as refurb.io and Arrow Direct, so shop around. eBay is always an option as well.

* * * * *

Backups and data integrity

So how can you detect when non-ECC RAM has corrupted your data? It’s quite easy, really, provided you’re consistently proactive.

One concept in data integrity and data security is the message digest, also called a hash or cryptographic hash. There have been several algorithms for this over the years, including the once-popular MD5 and SHA-1 algorithms. The computer security industry has currently settled on the SHA-2 standard as defined in NIST FIPS 180-4. Among the secure hash algorithms that standard defines are the four main SHA-2 variants: SHA-224, SHA-256, SHA-384, and SHA-512. Of these, SHA-256 is the most popular.

Publishing message digests with files for download has been a popular, though inconsistent practice in the open source community. Publishing the message digest provides a way to verify the file you downloaded is what the publisher intended and that the data didn’t get corrupted. Going along with that has also been the practice of PGP signing a text file containing the published message digests, further protecting that file against corruption and tampering.

It should hopefully be clear where I’m going with this. If you’re backing up files to a NAS, it can be worth your while to create a list of message digests for the files you are backing up for archival storage. Of the algorithms to use, I’d recommend SHA-256 simply because it is fast and widely used.

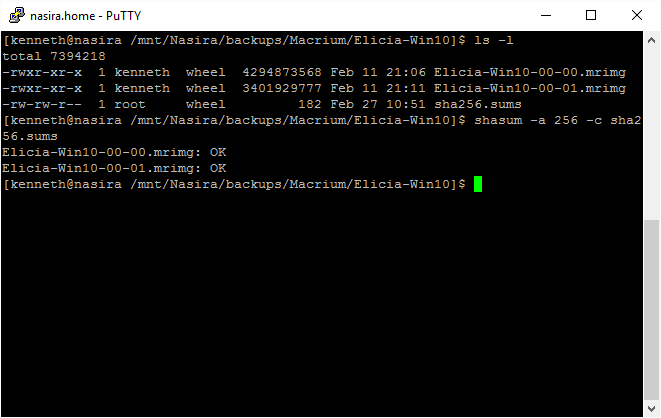

I personally use the sha256sum utility distributed with Cygwin. There are other utilities available, some of which are going to have a graphical user interface instead of being reliant on the command line. Ahead of copying the files to the NAS for archive, you’d want to create a text file in each folder and subfolder. This text file will list the SHA-256 digests for each file in that folder — let’s say it’s called sha256.sums:

sha256sum -b * > sha256.sums

Then once you have the files copied onto your FreeNAS box, you can verify the values listed in the files using the shasum utility (where [file] is the file containing the digests and filenames) that is installed with FreeNAS:

shasum -a 256 -c [file]

This will easily uncover whether some memory error has caused some data corruption since any message digests created on the source system will not match the message digests on the NAS. Okay, that’s not 100% true, as there’s a slight, slight, slight, slight, slight, slight (highlights, copies, pastes that 100 more times…) chance that the data will be modified in such a way as to produce a hash collision, but you have a far, far, far, far, far, far (highlights, copies, pastes that 100 more times…) better chance of winning the lottery.
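The sha256sum one-liner covers a single folder, so doing every folder and subfolder by hand gets tedious. Here is a minimal sketch of how that step could be scripted, assuming a POSIX shell and GNU sha256sum (what Cygwin ships). The function name make_sums is hypothetical. Like the one-liner, it skips dotfiles, and it won’t cope with filenames that contain newlines.

```sh
# Sketch: write a sha256.sums file into every folder under a root folder.
# Assumes GNU sha256sum is on the PATH; make_sums is a hypothetical name.
make_sums() {
    root="${1:-.}"
    find "$root" -type d | while IFS= read -r dir; do
        (
            cd "$dir" || exit 1
            # Hash regular files only, skipping subfolders and the list itself.
            for f in *; do
                [ -f "$f" ] || continue
                [ "$f" = "sha256.sums" ] && continue
                sha256sum -b -- "$f"
            done > sha256.sums
            # Don't leave an empty list behind in folders with no files.
            [ -s sha256.sums ] || rm -f sha256.sums
        )
    done
}

# Usage: make_sums /path/to/archive
```

Each list only names files in its own folder, so the folders can be copied or checked independently of each other.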

So regardless of whether you’re using ECC or non-ECC RAM, this is a way to verify that what you intend to archive is what FreeNAS actually writes to disk. If there’s any kind of memory or data corruption going on with your NAS, either due to faulty memory or faulty hardware of some kind, this will in general uncover it, provided you do this consistently. You could then re-upload the failed file to see if the problem resolves itself; if only one file fails, that should do the trick. If multiple files fail, something more serious is going on.
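Doing this consistently is easier if the check walks the whole archive on its own. What follows is a rough sketch, not a polished tool: it assumes every folder has a digest list named sha256.sums and that shasum is on the PATH, as it is on FreeNAS. The function name verify_sums and the SUM_CHECK override are both hypothetical, the latter there only for systems that have sha256sum instead.

```sh
# Sketch: re-check every sha256.sums list under a root folder.
# Assumes shasum is on the PATH (as on FreeNAS). SUM_CHECK is a hypothetical
# override for systems that only have sha256sum, e.g. SUM_CHECK="sha256sum -c".
verify_sums() {
    root="${1:-.}"
    check="${SUM_CHECK:-shasum -a 256 -c}"
    find "$root" -type f -name sha256.sums | (
        status=0
        while IFS= read -r list; do
            dir=$(dirname "$list")
            # Run from inside the folder so the relative filenames resolve.
            # $check is unquoted on purpose so its options split into words.
            if ! ( cd "$dir" && $check sha256.sums > /dev/null 2>&1 ); then
                echo "FAILED: $dir"
                status=1
            fi
        done
        exit "$status"
    )
}

# Usage: verify_sums /path/to/archive
```

It prints one line per folder with a mismatch and returns a non-zero status if any folder failed. Running shasum -a 256 -c by hand in a reported folder then shows which file is the culprit.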

Speaking of backing up data…

* * * * *

Off-site “cloud” backups

If you have a NAS and you don’t have an off-site backup solution for backing it up, you really need to find one. There are a lot of options out there for backing up data “into the cloud”. I looked at quite a few. And many of the options weren’t really all that great for backing up servers. The service either didn’t support it, or didn’t support anything except Windows servers. And I’m not going to set up a Windows client just to synchronize backups with a cloud solution.

Of the options that support FreeBSD, the fee schedules can be rather confusing. Starting with Amazon Glacier.

Amazon Glacier. Not only is Glacier laden with confusing fees, Amazon also doesn’t provide a client for communicating with the service. Rather than provide the details, I’ll let someone else summarize it. Hashbackup is a third-party backup utility that supports, among other services, Amazon Glacier. But its developers have deprecated that support, and they’ve summarized quite well why they did and why you shouldn’t consider Glacier as an option.

Google Cloud Storage. This one is a similar option to Glacier, and they provide three tiers of storage. Of what’s offered, the best storage price is their Nearline service, which charges .01 USD/GB (approximately 10 USD/TB), but they also charge .01 USD/GB for retrieval. Their other options don’t have retrieval fees, but do charge more for storage. They do have a free trial as well.

Rackspace Cloud Files. They are the most expensive of the ones I’ve considered at .10 USD/GB for the first TB, then .09 USD/GB after that till you get to 50 TB (which I’m not getting near). So that’ll be 100 USD for the first TB, and 90 USD/TB for each TB after that. And recovery is .12 USD/GB, or 120 USD/TB. Yikes! To their benefit, they do say they provide triple-redundancy, so perhaps that additional cost might be worth it. For me, for storing several TB of data, I’d rather buy another car for that monthly fee.

Dreamhost DreamObjects. They also appear similar to Google’s offering, but priced a little higher. They charge .025 USD/GB, twice that for retrieval, but they also offer pre-paid plans at a per-GB discount that’s still more expensive than Google, but still less expensive than Rackspace.
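To put those per-GB prices side by side, here is a quick back-of-the-envelope sketch. It assumes the quoted storage prices are per month and that 1 TB is 1000 GB, which is how I’ve been rounding. The helper names flat_cost and rackspace_cost are hypothetical, and retrieval fees are left out.

```sh
# Sketch: rough monthly storage cost from the per-GB prices quoted in the text.
# Assumes the prices are per month and that 1 TB = 1000 GB.
# flat_cost and rackspace_cost are hypothetical helper names.

# flat_cost SIZE_GB USD_PER_GB
flat_cost() {
    awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", gb * rate }'
}

# rackspace_cost SIZE_GB: .10 USD/GB for the first TB, .09 USD/GB after that
# (only valid below the 50 TB mark, where the next tier starts).
rackspace_cost() {
    awk -v gb="$1" 'BEGIN {
        first = (gb < 1000) ? gb : 1000
        printf "%.2f\n", first * 0.10 + (gb - first) * 0.09
    }'
}

size=500   # half a terabyte, as an example
echo "Google Nearline:  $(flat_cost "$size" .01) USD"
echo "DreamObjects:     $(flat_cost "$size" .025) USD"
echo "Rackspace:        $(rackspace_cost "$size") USD"
```

For 500 GB that works out to 5.00, 12.50 and 50.00 USD a month respectively, before any retrieval or transaction fees.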

Backblaze B2 Cloud Storage. Backblaze has been in the news recently after a report they published about HDD reliability led to a class-action lawsuit against Seagate, and they are facing a class-action lawsuit for how they ship external hard drives. That said, the ability to have your data shipped to you on an external drive, as opposed to trying to download it all back to your NAS, is certainly a nice feature.

Like Glacier, Backblaze does charge a per-transaction fee for their API calls, but it’s only .04 USD per 10,000 requests for downloads, and .04 USD per 1,000 requests for everything else. Unlike Glacier, they have a very clear fee schedule, and a page on the account dashboard showing you a tally.

Ultimately I went with Backblaze for the time being since they also have a free tier providing 10GB of free storage. It’s more than enough for figuring things out. Google does provide a free trial, but it’s time limited as opposed to capacity limited. Same with Amazon Glacier — again, though, avoid that option — and Dreamhost. Rackspace doesn’t appear to offer any kind of trial.

Backblaze also provides their own Python scripts for interacting with their service. I played around with one called the B2 Python Pusher. It’s pretty straightforward in how it works. Only downside is it’s slow to upload data. 490MB worth of pictures took about 45 minutes. Their web interface with Firefox, though, took only about 15 minutes to upload the same files. I currently have about a half terabyte sitting on the NAS, so I need something that will be a bit faster.

I mentioned Hashbackup earlier. Let me just say the uploads are significantly faster, taking only about 4 1/2 minutes to upload about 490MB of data. It is a little interesting to set up, though, and you’ll need to get familiar with the command line to do so.

I’ll run a few more tests with it with regard to cron jobs and see what it can really do.

* * * * *

New chassis

So which chassis did I settle on? After a bit of thought, I opted for the IPC-G3550. I considered the longer chassis not for its 120mm fans, but out of concern for clearance with regard to the mobile rack. But after having the rack in hand and taking some measurements with it, and noting the measurements for the Sabertooth mainboard, I decided on the shorter chassis. It’s a little less expensive but still supports a full-size ATX power supply.

So let’s get to building. That’ll happen in the next iteration. For now, I’ve got a few other projects on my plate.