- Nasira – A FreeNAS build

- Nasira – Part II

- Nasira – Part III

- Nasira with a little SAS

- Moving Nasira to a different chassis

- When a drive dies

- Adding an SLOG… or not

- Coming full circle

- Throwing a short pass

- More upgrades but… no more NVMe

- Check your mainboard battery

I first built Nasira and put it into operation in early 2016. The initial build-out was a pair of 4TB WD Red drives. And based on an article I read at the time, I made a mental note to buy Seagate drives when purchasing the next pair of 4TB drives. That was in early March 2016.

On September 6, I got an alert from Nasira that one of those Seagate 4TB drives was showing errors and the drive was knocked offline. So that was about 5 1/2 years in service before a drive died.

So why am I writing about this? To go over what I should’ve been doing before the drive died to make the replacement process a little smoother, while discussing a few other things I’ve discovered or realized over the years I’ve had the NAS.

Drive inventory

This was the first mistake I made. Nasira is currently in a 4U chassis with 12 hot-swap drive bays. Now when you have that many drives, or just more than two, it’s a good idea to have a chart or spreadsheet saved off that looks somewhat like this:

| Bay | Name | Drive | Serial No. |
| --- | --- | --- | --- |
| 1 | da0 | WD Red 4TB | WD-WCC4XXXXXXXX |
| 2 | da1 | WD Red 4TB | WD-WCC4XXXXXXXX |
| 3 | da2 | WD Red 4TB | WD-WCC4XXXXXXXX |
| 4 | da3 | WD Red 4TB | WD-WCC4XXXXXXXX |

Noting the drive bay and connection name is important for immediate reference. That’ll prevent you from having to do what I did: shut down the NAS to pull drives to find the one to be replaced. Though shutting it down was a good idea anyway to mitigate the risk of another drive failure before I could get the dead one replaced.

Even if all your connections are in the same order as your drive bays – e.g. da0 is drive bay 1 – it’s still a good idea to do this. If you need to replace a drive, pulling up this chart and comparing it against the drive list in TrueNAS (or your NAS software of choice) should tell you at a glance which drive you need to replace.
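As a rough illustration of how such a chart can be used, here is a minimal Python sketch that keeps the inventory as CSV text and looks up the bay for a given serial number. The inventory contents, the serial numbers, and the function names `load_inventory` and `find_bay` are all hypothetical placeholders. Keying on the serial number rather than the connection name is a design assumption: connection names can shift when drives are moved or re-cabled, while the serial stays with the drive.

```python
import csv
import io

# Hypothetical inventory in the same shape as the chart above.
# Serial numbers are placeholders, not real drives.
INVENTORY_CSV = """bay,name,drive,serial
1,da0,WD Red 4TB,WD-EXAMPLE0001
2,da1,WD Red 4TB,WD-EXAMPLE0002
3,da2,Seagate 4TB,SG-EXAMPLE0003
4,da3,Seagate 4TB,SG-EXAMPLE0004
"""


def load_inventory(text):
    """Return a dict mapping serial number -> inventory row."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["serial"]: row for row in reader}


def find_bay(inventory, serial):
    """Return the bay number for a serial, or None if it is not listed."""
    row = inventory.get(serial)
    return int(row["bay"]) if row else None


inventory = load_inventory(INVENTORY_CSV)
print(find_bay(inventory, "SG-EXAMPLE0003"))  # bay holding that serial
```

When the NAS reports a failed drive, copy the serial number it shows into `find_bay` and you have the bay to pull without shutting anything down.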

Speaking of replacement drives…

Have drive replacements on hand

This was the second mistake I corrected. Well, in part.

In my instance, this isn’t nearly as crucial. Living in Kansas City, thankfully Micro Center has a nearby store where I readily picked up a replacement 4TB NAS drive. And I didn’t buy just one to replace the dead one. I bought two. The reason is twofold.

First, the Seagate 4TB drives were bought as a pair in the same order. This means they’re similar in age and probably from the same lot, so the chance of the second Seagate drive dying in the next couple months is substantially higher than for the WD Red 4TB drives. Second, having the spare on hand means that, when that happens, I already have the replacement.

If you don’t live somewhere you can buy a new drive the same day, you should have replacements on hand. And even if you do, you should still keep replacements on hand in case the place where you would normally get one is out of stock the day you need it.

For mirrored pairs, ideally you should have at least 1 drive for each pair. If all your drives are the same capacity, then just keep a few spares on hand. If you have a setup like mine, with a mixture of drive capacities, try to keep one of each capacity on hand. For my setup, this would mean one each of 4TB, 6TB, 10TB, and 12TB.

For RAID-Zx configurations, the minimum you should keep on hand is the maximum number of drives you can safely lose based on your configuration. So if your drives are in a RAID-Z2 configuration (the ZFS counterpart to RAID 6), have at minimum 2 spares on hand for each Z2 vdev you have. And the need to have these on hand is even more critical if all your drives are similar in age or came from the same lot, since once one drive dies, the likelihood of another going with it within a short period of time goes up considerably.
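The rules of thumb above can be sketched in a few lines of Python. This is only an illustration: the function names and the example pool layouts are hypothetical, and the counts simply restate the guidance in this section (one spare per drive capacity for mirrored pairs, one spare per parity drive for each RAID-Zx vdev).

```python
def spares_for_mirrors(pair_capacities_tb):
    """One spare per distinct drive capacity across the mirrored pairs."""
    return {capacity: 1 for capacity in sorted(set(pair_capacities_tb))}


def spares_for_raidz(parity_levels):
    """One spare for each drive a RAID-Zx vdev can lose (its parity level)."""
    return sum(parity_levels)


# Hypothetical pool of mirrored pairs: two 4TB pairs plus 6TB, 10TB, 12TB pairs.
print(spares_for_mirrors([4, 4, 6, 10, 12]))

# Hypothetical pool of two RAID-Z2 vdevs: each can lose 2 drives.
print(spares_for_raidz([2, 2]))
```

The first call yields one spare each of 4TB, 6TB, 10TB, and 12TB; the second yields 4 spares in total.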

Resilvering time

I said in my first article that rebuild time was the primary reason to go with mirrored pairs. When you lose a drive, obviously you want your array back online as fast as possible, if for nothing more than getting it online before another drive fails. And the amount of time it takes to rebuild a dead drive depends on the read and write speeds of all drives in the pool and on the pool’s topology.

As SSDs come down in price more and more, they will displace HDDs in a NAS for this reason. (As of this writing, 4TB 2.5″ SSDs are about 380 USD, about the same price as a 10TB or 12TB HDD.)

With any RAID-Zx configuration, parity data needs to be recalculated and blocks need to be rebuilt from parity. This will slow down the resilver a lot simply due to the amount of data that must be read per block that will be written back out. More drives or more data = more time required.

With mirrored pairs, it’s just a straight copy. Read and write.

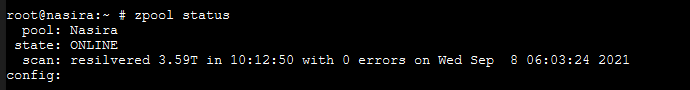

The 4TB drive I replaced was nearly full. The resilver took over 10 hours to copy about 3.59 TiB of data. (For reference, TrueNAS reports 3.64 TiB as the drive capacity.) That works out to an average rebuild speed of about 102 MiB per second, or about what could be expected for platter HDDs.
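The arithmetic behind that figure can be checked with a short Python sketch. The 10.25 hour duration is an assumption standing in for “over 10 hours”, and the function names are hypothetical.

```python
MIB_PER_TIB = 1024 * 1024
SECONDS_PER_HOUR = 3600


def resilver_rate_mib_s(data_tib, hours):
    """Average throughput in MiB/s for copying data_tib in the given hours."""
    return data_tib * MIB_PER_TIB / (hours * SECONDS_PER_HOUR)


def resilver_hours(data_tib, rate_mib_s):
    """Hours needed to copy data_tib at a steady rate_mib_s."""
    return data_tib * MIB_PER_TIB / rate_mib_s / SECONDS_PER_HOUR


# 3.59 TiB copied in an assumed 10.25 hours ("over 10 hours").
print(round(resilver_rate_mib_s(3.59, 10.25)))  # 102
```

The same `resilver_hours` function gives a rough lower bound for larger mirrored drives: the time scales linearly with the amount of data to copy.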

Hardware choices

But it’s with resilvering and scrub times that hardware choices in your NAS will make all the difference. Regardless of what HDDs you use, don’t expect more than about 100MB/s on average for read and write speeds. This means your performance differences will come down to how those drives are connected.

So rule #1 with a DIY NAS: avoid using the SATA connections on your mainboard. Instead just buy a SAS-to-SATA HBA controller and connect everything up to it. Your cabling will be cleaner as it replaces 4 individual SATA cables with 1 SAS to SATA cable.

And the processor onboard the controller card will also take some of the load off the CPU. This will mitigate the read penalty that comes with scrubbing and resilvering RAID-Zx pools. When resilvering a RAID-Zx pool, the read side matters more than the write side: the faster the system can read data in parallel from each drive in the pool, the faster it can calculate whatever it needs to write out to the drive being rebuilt.

It won’t be anywhere near what you could expect for mirrored pairs, but it’ll still give you the best resilver and scrub times.

And rule #2 is to use hot-swap bays and ensure your system is properly configured for them. If you’re using an HBA card, you should already be set on this. If you’re using your mainboard’s SATA connections (again, avoid doing this), you will need to go into your BIOS to ensure hot-swap is enabled for each port. This way you don’t need to shut down your NAS to replace a drive.
