With the first zone effectively done, it was time to plan the second switch. The requirements here are a little more involved than the Zone 1 switch:

- 10GbE uplink to Zone 1

- 2x10GbE connections for Mira and Absinthe

- Multiple 1GbE connections with Auto-MDIX

- Wireless support to create a hotspot

To this end, this is the main system hardware:

- CPU: AMD FX-8350

- Mainboard: Gigabyte 990FXA-UD3

- Memory: 2x4GB DDR3

- Storage: SanDisk Cruzer Fit 16GB USB 2.0

- Graphics: nVidia GeForce2 MX400 PCI

Networking hardware:

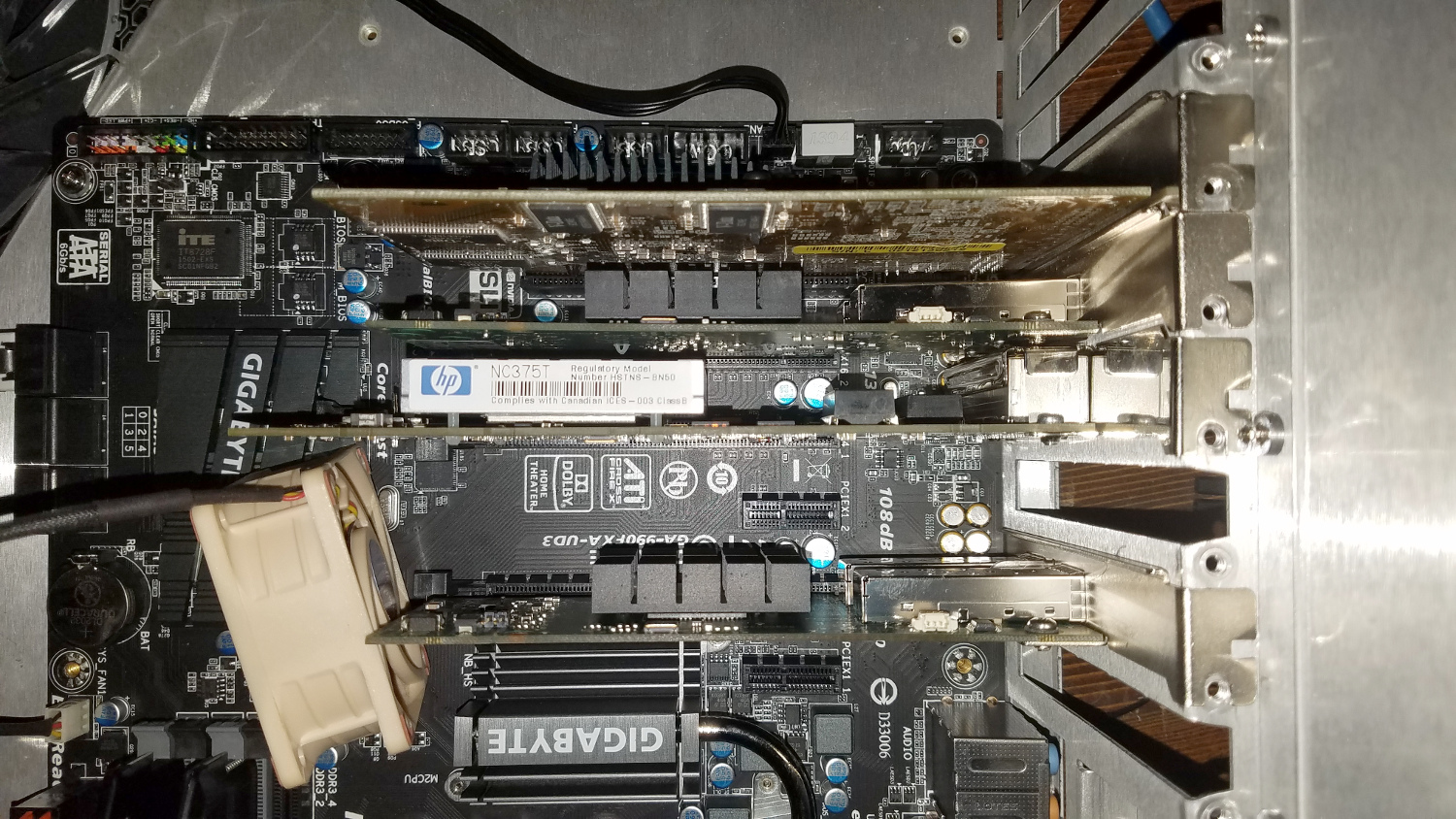

- 10GbE: 2xMellanox ConnectX-2 (MNPH29-XTR)

- Transceivers: Fiber Store Generic 10GBase-SR

- Gigabit: HP NC375T

- Wireless: TP-Link AC1900

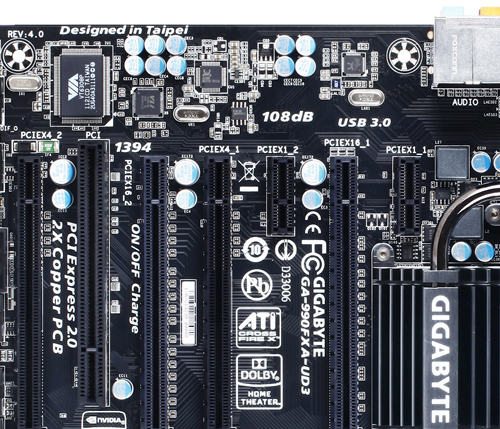

The 990FXA-UD3 mainboard has two each of x16, x4, and x1 PCI-Express 2.0 slots, plus a PCI slot, which is enough to support this layout:

- x16 – Mellanox ConnectX-2

- x1 – TP-Link AC1900

- x4 – Quad-port Gigabit

- x16 – Mellanox ConnectX-2

- PCI – GeForce2 MX400
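As a rough sanity check on that layout, here is a minimal Python sketch comparing each card's nominal network throughput against the usable bandwidth of its PCI-Express 2.0 link. The 5 GT/s per lane and 8b/10b encoding figures are standard for PCI-E 2.0. The lane widths (x8 for the ConnectX-2, x4 for the NC375T, x1 for the wireless card) and the 1.9 Gb/s figure for the AC1900 are assumptions for illustration, so check them against the spec sheets for your own cards.

```python
# PCI-Express 2.0 signals at 5 GT/s per lane and uses 8b/10b encoding,
# so only 8 of every 10 bits on the wire carry data.
PCIE2_GT_PER_LANE = 5.0
ENCODING_EFFICIENCY = 8 / 10


def pcie2_usable_gbps(lanes):
    """Usable bandwidth per direction, in Gb/s, before protocol overhead."""
    return lanes * PCIE2_GT_PER_LANE * ENCODING_EFFICIENCY


# (name, lanes the card negotiates, nominal network throughput in Gb/s)
# Lane widths and the wireless figure are ASSUMPTIONS for illustration.
CARDS = [
    ("Mellanox ConnectX-2, dual-port 10GbE", 8, 2 * 10.0),
    ("HP NC375T, quad-port 1GbE", 4, 4 * 1.0),
    ("TP-Link AC1900 wireless", 1, 1.9),
]


def headroom_report(cards):
    """Return one dict per card with link bandwidth and whether it fits."""
    report = []
    for name, lanes, needed in cards:
        available = pcie2_usable_gbps(lanes)
        report.append({
            "name": name,
            "available_gbps": available,
            "needed_gbps": needed,
            "fits": available >= needed,
        })
    return report


for row in headroom_report(CARDS):
    status = "ok" if row["fits"] else "bottleneck"
    print(f"{row['name']}: needs {row['needed_gbps']:.1f} Gb/s, "
          f"link carries {row['available_gbps']:.1f} Gb/s ({status})")
```

Under those assumptions an x8 link carries about 32 Gb/s per direction before packet overhead, which leaves room above the 20 Gb/s a dual-port 10GbE card can push. The same card forced down to four lanes would only have about 16 Gb/s to work with.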

Mmm…. look at all those expansion slots, just waiting to have something… inserted into them.

And for this switch I’ve opted to use an old PCI graphics card, a GeForce2 MX400. I think that chipset came out around the time my oldest niece was born (she’s 15 as of when I write this). I bought it when I was still in college as an upgrade for a Riva TNT AGP card, opting for the PCI version since it was less expensive than the AGP version when I bought it. The PCI card will keep the last x4 slot open.

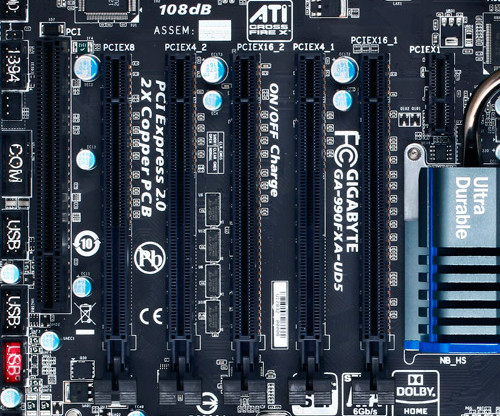

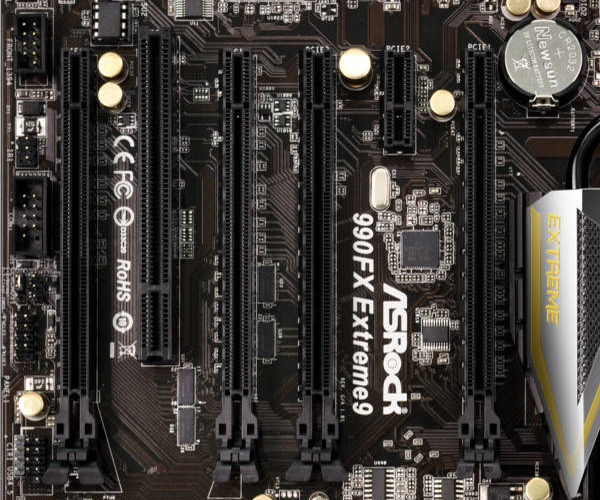

If I needed three dual-port 10GbE cards, I could’ve used the Gigabyte 990FXA-UD5. It has a primary x16 slot and two x8 slots while still having two x4 slots, an x1, and a PCI slot, though the position of the x1 slot limits you to short cards. The ASRock 990FX Extreme9 has a similar slot configuration but only one x4 slot, as it has six slots overall. Its x1 slot is better positioned for longer cards, such as the intended AC1900.

For cables and transceivers, I went back to Fiber Store. This time the order was for six (6) 10GBase-SR transceivers and three LC to LC OM4 optical fiber cables: two 10m cables for connecting Mira and Absinthe to the switch, and a 30m cable for connecting Zone 2 to Zone 1.

Intel PRO/1000

One lesson I learned from this build: avoid Ethernet adapters based on the Intel PRO/1000 chipset. In doing some research, I found one comment on Amazon suggesting this chipset doesn’t support anything other than PCI-E 1.0, and a Reddit thread suggests the same. So if your mainboard can downgrade specific slots to older PCI-E standards, you may be fine, but there’s no guarantee.

In the case of the 990FX, you’re out of luck. The card wouldn’t light up for me, and under Linux it did not show up in the lspci device listing. I’ll try it later with one of the Athlon X2 boards I have to see if it’ll light up there, though I’m not sure what I’d do with it if it does. Perhaps use it to create a master for a small cluster.

So if you’re going to look for a quad-port Ethernet card, stay away from the Intel PRO/1000 PT cards you can find all over eBay unless you can confirm compatibility with the mainboard you’re intending to use.
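If you want to script that compatibility check on a test bench, here is a minimal Python sketch that filters `lspci -nn` output for Ethernet controllers from a given vendor. It assumes `lspci` (from pciutils) is installed, and the helper names are hypothetical. `8086` is Intel's PCI vendor ID and `0200` is the PCI class code for Ethernet controllers.

```python
import re
import subprocess

# With -nn, lspci appends IDs in brackets: the class code as [0200]
# and the vendor:device pair as [8086:xxxx].
VENDOR_DEVICE = re.compile(r"\[([0-9a-f]{4}):([0-9a-f]{4})\]")
ETHERNET_CLASS = "[0200]"


def find_ethernet_devices(lspci_text, vendor_id):
    """Return the lspci lines for Ethernet controllers from one vendor."""
    wanted = vendor_id.lower()
    matches = []
    for line in lspci_text.splitlines():
        if ETHERNET_CLASS not in line:
            continue
        ids = VENDOR_DEVICE.search(line)
        if ids and ids.group(1) == wanted:
            matches.append(line.strip())
    return matches


def read_lspci():
    """Run `lspci -nn` and return its output as text."""
    result = subprocess.run(
        ["lspci", "-nn"], capture_output=True, text=True, check=True
    )
    return result.stdout


def card_is_visible(vendor_id="8086"):
    """True if at least one Ethernet controller from the vendor enumerates."""
    return len(find_ethernet_devices(read_lspci(), vendor_id)) > 0
```

Calling `card_is_visible()` with the card installed tells you whether the board enumerated it at all. An empty result only says the card didn't show up on the bus. It doesn't say why.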

Buying surplus retired server hardware can come with a few gambles. And apparently with some chipsets, you need to be aware of Chinese fakes.

Mellanox ConnectX-2

A lot of Mellanox cards you’ll find on the market are OEM cards, so compatibility with the Mellanox drivers may not be guaranteed across all platforms. Information on specific part numbers can be sparse, but thankfully most sale listings include the part number in the title or somewhere in the body, which gives you something to research.

Look for the Mellanox-specific model numbers to ensure the greatest chance of getting ones that will work: MNPH29-XTR for a dual-port ConnectX-2 card, or MNPA19-XTR for a single-port. On the Zone 1 switch, I mentioned another part number that saw success: 81Y1541, which is a dual-port ConnectX-2 OEM-branded by IBM.

Part number 59Y1906, also OEM-branded IBM, gave me nothing but trouble. The Mellanox EN driver for Fedora 24 refused to do anything with either card. The default mlx4_core driver that comes with Fedora 24 and the latest kernel continually displayed error messages to the screen about a command failing. Installing the Mellanox EN driver only made things worse. And all of the Mellanox tools for querying the device returned the error code MFE_UNSUPPORTED_DEVICE.

Despite the A1 sticker on the card, all utilities that could read the data from the card showed the chip revision to be A0. And that I think is the reason the Mellanox utilities refused to support it.
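One way to cross-check the revision without the Mellanox tools is to read it straight from PCI configuration space, which Linux exposes under `/sys/bus/pci/devices/<address>/config`. Here is a minimal Python sketch, assuming the revision those utilities report corresponds to the standard PCI Revision ID byte at offset 0x08. The helper names are hypothetical, and `0x15b3` is Mellanox's PCI vendor ID.

```python
import struct
from pathlib import Path

MELLANOX_VENDOR_ID = 0x15B3


def parse_pci_header(config):
    """Pull vendor ID, device ID, and revision ID out of raw config space.

    Standard PCI header layout: vendor ID at offset 0x00 and device ID at
    0x02 (both 16-bit little-endian), revision ID as one byte at 0x08.
    """
    if len(config) < 9:
        raise ValueError("need at least 9 bytes of config space")
    vendor, device = struct.unpack_from("<HH", config, 0)
    return {"vendor": vendor, "device": device, "revision": config[8]}


def list_devices(vendor_id, sysfs_root="/sys/bus/pci/devices"):
    """Map PCI address -> parsed header for every device from one vendor."""
    found = {}
    for dev in sorted(Path(sysfs_root).iterdir()):
        config_file = dev / "config"
        if not config_file.is_file():
            continue
        # The first 64 bytes (the standard header) are readable without root.
        with open(config_file, "rb") as handle:
            header = parse_pci_header(handle.read(64))
        if header["vendor"] == vendor_id:
            found[dev.name] = header
    return found


def describe(found):
    """Format each device as 'address vendor:device rev xx'."""
    return [
        f"{addr} {h['vendor']:04x}:{h['device']:04x} rev {h['revision']:02x}"
        for addr, h in found.items()
    ]
```

On a Linux box, `describe(list_devices(MELLANOX_VENDOR_ID))` lists each Mellanox card with the revision byte the silicon itself reports, which is independent of whatever sticker is on the board.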

Interestingly, the cards did work under Windows 10 with the latest Mellanox WinOFED driver (WinOF 5.22), or at least they weren’t continually giving me errors. If I had both cards plugged in, though, one would fail to operate, with Windows reporting a Code 43. I think the problem there might have been that it was not Windows Server, and I didn’t try them with Windows Server.

So if you obtain that part number, be aware that you may not be able to use it under Linux, but you should be able to use it under Windows. Just make sure to install the latest WinOFED driver to get all the configuration features that are available. The command-line utilities under Windows also reported them as being unsupported even though the drivers appeared operational.

Other part numbers may have similar problems, so do some quick research before buying to save yourself the headache I’ve endured.

Blending in

Given this one will be near our entertainment center, I opted for an HTPC chassis to blend in. Specifically I went with the Silverstone GD09.

I’m not too fond of the potential airflow options. But this chassis actually has an expansion slot situated above the other expansion slots.

A rather interesting position. And actually the perfect position for a slot bracket for fans, such as what you can find on modDIY. The grill is wide enough for an 80mm fan, but too slim for anything larger. A better option is using expansion slot fan mounts that mount above the cards, such as this other one from modDIY (check eBay for better prices), to mount a pair of 60mm or 70mm fans above the cards to take advantage of the width of the vent for overall better airflow.

And the fan positions over the mainboard I/O are 80mm. All other fan positions are 120mm. The cards on the test bench show as well how important cooling will be for this setup.

And while the cross flow isn’t the greatest on the Silverstone GD09, there are ways of maneuvering the air where I need it. Specifically I may be able to use the 120mm fan mount that is adjacent to the power supply as an intake with a duct (such as this one from Akust) to direct air onto the cards.

Continuing…

That’s it for now. I’m waiting for the last of the hardware to arrive from Fiber Store.

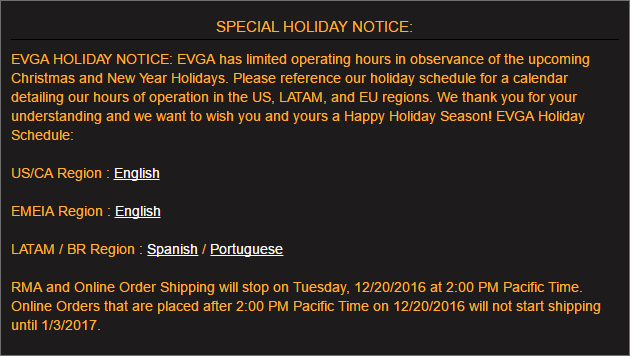

The power supply I have planned for this is also an RMA I’m waiting to receive from EVGA. Unfortunately they aren’t going to resume any shipments until January 3, 2017. I may shortcut that and just buy another power supply from Micro Center, since I also still need to buy the USB drive. We’ll see. But for now this is where I’ll leave it.
