If you’re looking to make a career out of anything computer-related, two concepts you really need to be familiar with are virtualization and containerization. The latter is relatively new, but virtualization has been around for quite a while.

VMware made virtualization more accessible, releasing their first virtualization platform almost 20 years ago. Almost hard to believe it’s been that long. I started fooling around with a very early version of VMware back when I was still in community college.

That head start is why they are basically the name in virtualization. But they are not the only name.

My home virtualization server is an old HP Z600 I picked up a couple years ago: a dual-Xeon E5520 (4 cores/8 threads per processor) that I loaded out with about 40GB RAM (it came with 8GB when I ordered it), a Mellanox 10GbE SFP+ NIC, and a 500GB SSD. The intent from the outset was virtualization. I wanted a system I could dedicate to virtual machines.

Initially I put VMware ESXi on it, simply because it was a name I readily recognized and knew. You can download the free version after registering a VMware account. First, let’s go over the VMs I had installed:

- Docker machine: Fedora 27, 4 cores, 8GB RAM

- Plex media server: Fedora 27, 2 cores, 4GB RAM

- Backup proxy: Fedora 27, 2 cores, 2GB RAM

- Parity node: Ubuntu Server 16.04.3 LTS, 4 cores, 8GB RAM

All Fedora 27 installations use the “Minimal Install” option from the network installer with “Guest Agents” and “Standard” add-ons.

My wife and I noticed that Plex had a propensity to pause periodically when playing a movie, and even when playing music. I didn’t think Plex was the concern, but rather the virtual machine subsystem. Everything is streamed in original quality, so the CPU was barely being touched.

And my NAS certainly wasn’t the issue either. Playing movies or music directly from the NAS didn’t have any issues. With Plex’s CPU usage nowhere near anything concerning, that pointed to virtualization as the issue, specifically the underlying VMware hypervisor.

This prompted me to look for another solution. Plus, the VMware ESXi 6.5 installer told me the Z600’s hardware was deprecated.

Enter Proxmox VE.

I’ve been using it for a few weeks now, and I’ve already noticed the virtual machines appear to be performing significantly better than on VMware. All of them, not just Plex, where the intermittent pausing is gone. Here’s the current loadout (about the same as before):

- Docker machine: Fedora 27, 4 cores, 4GB RAM

- Plex media server: Fedora 27, 2 cores, 4GB RAM

- Backup proxy: Fedora 27, 2 cores, 2GB RAM

- Parity node: Ubuntu 16.04.3 LTS, 4 cores, 16GB RAM
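
To see how much of the host this loadout claims, the allocations in the list above can be tallied against the Z600’s 16 threads and roughly 40GB of RAM. This is only a quick sketch: the `GUESTS` table and the `tally` helper are names made up for illustration, and the figures are copied straight from this post.

```python
# Guest allocations copied from the list above: (name, vCPUs, RAM in GB).
GUESTS = [
    ("Docker machine", 4, 4),
    ("Plex media server", 2, 4),
    ("Backup proxy", 2, 2),
    ("Parity node", 4, 16),
]

# Host figures from earlier in the post: dual E5520 (2 x 8 threads), about 40GB RAM.
HOST_THREADS = 16
HOST_RAM_GB = 40


def tally(guests):
    """Return (total vCPUs, total RAM in GB) assigned across all guests."""
    total_vcpus = sum(vcpus for _, vcpus, _ in guests)
    total_ram_gb = sum(ram for _, _, ram in guests)
    return total_vcpus, total_ram_gb


if __name__ == "__main__":
    vcpus, ram = tally(GUESTS)
    print(f"vCPUs assigned: {vcpus} of {HOST_THREADS} host threads")
    print(f"RAM assigned: {ram}GB of about {HOST_RAM_GB}GB")
```

That works out to 12 vCPUs and 26GB assigned, before counting whatever the hypervisor itself needs.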

A note about Parity: it is very memory hungry, which is why I gave it 16GB this round instead of just 8GB (initially I gave it 4GB). Not sure if it’s due to memory leaks or what, but it seems to always max out the RAM regardless of how much I give it.

Plex at least I know uses the RAM to buffer video and audio. What is Parity doing with it?

Proxmox VE out of the box has no limitation on cores either. They don’t limit system use to get you to buy a subscription. So it will use all 16 logical cores on the Z600 (8 physical cores, 16 threads). According to the specification sheet, it’ll support up to 160 CPU cores and 2TB of RAM per node. And it’s free to use.

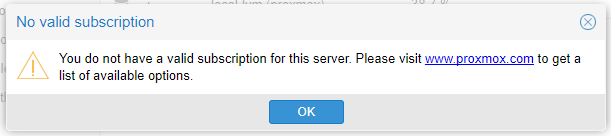

It will, however, nag you when logging into the web interface if you don’t buy a support subscription. And you’ll see error messages in the log saying the “apt-get update” command failed, since you need a subscription to access the Proxmox enterprise update repository. But you can disable that repository to keep those error messages from showing up, and there are tutorials online about removing the nagging message for not having a subscription.
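
Disabling a repository on a Debian-based system generally comes down to commenting out its `deb` line. Here is a minimal sketch of that transformation. It assumes the subscription-only repository is defined in a file like `/etc/apt/sources.list.d/pve-enterprise.list` with a single `deb` entry, which is what I’d expect on a typical install but worth checking on yours. The function name `disable_repo_lines` is made up, and the code only works on text, so it never touches the real file.

```python
def disable_repo_lines(sources_text):
    """Comment out every active 'deb' / 'deb-src' entry in APT sources text."""
    result = []
    for line in sources_text.splitlines():
        if line.lstrip().startswith(("deb ", "deb-src ")):
            # Prefix active entries with '#' so APT ignores them.
            result.append("# " + line)
        else:
            # Blank lines and existing comments pass through unchanged.
            result.append(line)
    return "".join(entry + "\n" for entry in result)


# Assumed content of the enterprise repository file on a Debian stretch based install.
EXAMPLE = "deb https://enterprise.proxmox.com/debian/pve stretch pve-enterprise\n"

if __name__ == "__main__":
    print(disable_repo_lines(EXAMPLE), end="")
```

Running the result through the function a second time changes nothing, since already-commented lines are left alone.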

The lowest cost for that subscription, as of this writing, is 69.90 EUR per year per physical CPU, not per CPU core. So for a dual-Xeon or dual-Opteron system, it’d be shy of 140 EUR (~170 USD) per year. Quad-Xeon or quad-Opteron servers would be shy of 280 EUR (~340 USD). Which isn’t… horrible, I suppose.
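
The per-socket math is simple enough to sketch out. The 69.90 EUR price comes from above. The exchange rate of 1.22 USD per EUR is my own assumption, picked because it roughly reproduces the dollar figures quoted here, and the function names are purely illustrative.

```python
PRICE_PER_CPU_CENTS = 6990  # 69.90 EUR per physical CPU per year (lowest tier)
EUR_TO_USD = 1.22  # assumed exchange rate, not an official figure


def yearly_cost_eur(sockets):
    """Yearly subscription cost in EUR for a host with the given socket count."""
    return sockets * PRICE_PER_CPU_CENTS / 100


def to_usd(eur):
    """Convert EUR to whole USD at the assumed rate."""
    return round(eur * EUR_TO_USD)


if __name__ == "__main__":
    for sockets in (2, 4):
        eur = yearly_cost_eur(sockets)
        print(f"{sockets} sockets: {eur:.2f} EUR/year (~{to_usd(eur)} USD)")
```

The price scales with sockets rather than cores, so swapping in CPUs with more cores wouldn’t change the bill.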

The base system is built around Debian Linux and integrates KVM for virtualization. Which basically makes Proxmox a custom Debian Linux distribution with a nice front-end for managing virtual machines. Kind of how Volumio for the Raspberry Pi is a custom Raspbian Jessie distribution.

It also supports LXC for containers. Note that LXC containers are quite different from Docker containers, though there have been several requests to integrate Docker support into Proxmox VE. Which would be great if they did, since that would eliminate one of my virtual machines altogether. But I doubt they’ll be able to cleanly support Docker given what would be involved: not just containers, but volumes, networks, images, etc.

The only hiccup I’ve had with it came while installing Proxmox. First, I had to burn a disc to actually install it. Attempting to write the ISO to a USB drive didn’t work out. Perhaps I needed to use DD mode with Rufus, but following their instructions didn’t work.

It also did not support the 10GbE card during installation, so I had to re-enable the Z600’s onboard Gigabit port to complete the installation with networking support properly enabled. Once installed, it detected the 10GbE card, and I was able to add it into the bridge device and disconnect the Gigabit port from the switch.

This machine will also soon be phased out. I don’t have enough room on this box to set up other virtual machines that I’d like to run. For example, I’d like to play around with clustering: Apache Mesos, Docker Swarm, perhaps MPI. So this will be migrated to a system with dual Opteron 6278 processors on an Asus KGPE-D16 dual-G34 mainboard, which supports up to 256GB RAM (128GB if using unregistered or non-ECC memory).

I’ll be keeping this system around for a while still, though, since it does still have some use. It’s just starting to really show its age.
